A desktop PC used to need a lot of different chips to make it work. You had the big parts: the CPU that executed most of your code and the GPU that rendered your pretty 3D graphics. But there were a lot of smaller bits too: a chip called the northbridge handled all communication between the CPU, GPU, and RAM, while the southbridge handled communication between the northbridge and other interfaces like USB or SATA. Separate controller chips for things like USB ports, Ethernet ports, and audio were also often required if this functionality wasn't already integrated into the southbridge itself.

As chip manufacturing processes have improved, it's now possible to cram more and more of these previously separate components into a single chip. This not only reduces system complexity, cost, and power consumption, but it also saves space, making it possible to fit a high-end computer from yesteryear into a smartphone that can fit in your pocket. It's these technological advancements that have given rise to the system-on-a-chip (SoC), one monolithic chip that's home to all of the major components that make these devices tick.

The fact that every one of these chips includes what is essentially an entire computer can make keeping track of an individual chip's features and performance quite time-consuming. To help you keep things straight, we've assembled this handy guide that will walk you through the basics of how an SoC is put together. It will also serve as a guide to most of the current (and future, where applicable) chips available from the big players making SoCs today: Apple, Qualcomm, Samsung, Nvidia, Texas Instruments, Intel, and AMD. There's simply too much to talk about to fit everything into one article of reasonable length, but if you've been wondering what makes a Snapdragon different from a Tegra, here's a start.

Putting a chip together

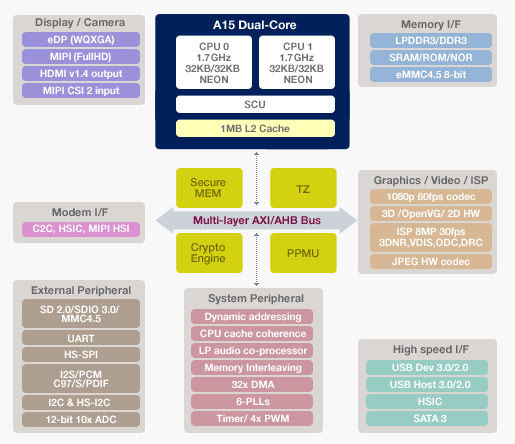

A very simplified look at the layout of Samsung's Exynos 5 Dual. The CPU and GPU are there, but they're just small pieces of the larger puzzle.

No discussion of smartphone and tablet chips can happen without a discussion of ARM Holdings, a British company with a long history of involvement in embedded systems. ARM's processors (and the instruction set that they use, also called ARM) are designed to consume very small amounts of power, much less than the Intel or AMD CPUs you might find at the heart of a standard computer. This is one of the reasons why you see ARM chips in so many phones and tablets today. To better understand how ARM operates (and to explain why so many companies use ARM's CPU designs and instruction sets), we first must talk a bit about Intel.

Intel handles just about everything about its desktop and laptop CPUs in-house: Intel owns the x86 instruction set its processors use, Intel designs its own CPUs and the vast majority of its own GPUs, Intel manufactures its own chips in its own semiconductor fabrication plants (fabs), and Intel handles the sale of its CPUs to both hardware manufacturers and end users. Intel can do all of this because of its sheer size, but it's one of the only companies able to work this way. Even in AMD's heyday, the company was still licensing the x86 instruction set from Intel. More recently, AMD sold off its own fabs—the company now directly handles only the design and sale of its processors, rather than handling everything from start to finish.

ARM's operation is more democratized by design. Rather than making and selling any of its own chips, ARM creates and licenses its own processor designs for other companies to use in their chips—this is where we get things like the Cortex-A9 and the Cortex-A15 that sometimes pop up in Ars phone and tablet reviews. Nvidia's Tegra 3 and 4, Samsung's Exynos 4 and 5, and Apple's A5 processors are all examples of SoCs that use ARM's CPU cores. ARM also licenses its instruction set for third parties to use in their own custom CPU designs. This allows companies to put together CPUs that will run the same code as ARM's Cortex designs but have different performance and power consumption characteristics. Both Apple and Qualcomm (with their A6 and Snapdragon S4 chips, respectively) have made their own custom designs that exceed Cortex-A9's performance but generally use less power than Cortex-A15.

The situation is similar on the graphics side. ARM offers its own "Mali" series GPUs that can be licensed the same way its CPU cores are licensed, or companies can make their own GPUs (Nvidia and Qualcomm both take the latter route). There are also some companies that specialize in creating graphics architectures. Imagination Technologies is probably the biggest player in this space, and it licenses its mobile GPU architectures to the likes of Intel, Apple, and Samsung, among others.

Chip designers take these CPU and GPU bits and marry them to other necessary components—a memory interface is necessary, and specialized blocks for things like encoding and decoding video and processing images from a camera are also frequent additions. The result is a single, monolithic chip called a "system on a chip" (SoC) because of its more-or-less self-contained nature.

A good example of a "package on package" design that stacks the RAM on top of the rest of the SoC.

There are two things that sometimes don't get integrated into the SoC itself. The first is RAM, which is sometimes a separate chip but is often stacked on top of the main SoC to save space (a method called "package-on-package," or PoP for short). The second is wireless connectivity, which is sometimes handled by a separate chip. In smartphones especially, however, the cellular modem is often incorporated into the SoC itself.

While these different ARM SoCs all run the same basic code, there's a lot of variety between chips from different manufacturers. To make things a bit easier to digest, we'll go through all of the major ARM licensees and discuss their respective chip designs, those chips' performance levels, and products that each chip has shown up in. We'll also talk a bit about each chipmaker's plans for the future, to the extent that we know about them, and about the non-ARM SoCs that are slowly making their way into shipping products. Note that this is not intended to be a comprehensive look at all ARM licensees, but rather a thorough primer on the major players in today's and tomorrow's phones and tablets.

Apple

Apple's chips appear exclusively in Apple's phones and tablets, and iOS is optimized specifically for them. This lets Apple get good performance with less RAM and fewer CPU cores than other companies' high-end chips.

We'll tackle Apple's chips first, since they show up in a relatively small number of products, all of them Apple's own. We'll start with the oldest models and work our way up.

The Apple A4 is the oldest chip still used by current Apple products, namely the fourth generation iPod touch and the free-with-contract iPhone 4. This chip marries a single Cortex A8 CPU core to a single-core PowerVR SGX 535 GPU and either 256MB or 512MB of RAM (for the iPod and iPhone, respectively). This chip was originally introduced in early 2010 with the original iPad, so it's quite long in the tooth by SoC standards. Our review of the fifth generation iPod touch shows just how slow this thing is by modern standards, though Apple's tight control of iOS means that it can be optimized to run reasonably well even on old hardware (the current version of iOS runs pretty well on the nearly four-year-old iPhone 3GS).

Next up is the Apple A5, which despite being introduced two years ago is still used in the largest number of Apple products. The still-on-sale iPad 2, the iPhone 4S, the fifth-generation iPod touch, and the iPad mini all have the A5 at their heart. This chip combines a dual-core Cortex A9 CPU, a dual-core PowerVR SGX 543MP2 GPU, and 512MB of RAM. Along with the aforementioned heavy optimization of iOS, this combination has made for quite a long-lived SoC. The A5 also has the greatest number of variants of any Apple chip: the A5X used the same CPU but included a larger quad-core GPU (the PowerVR SGX 543MP4), 1GB of RAM, and the wider memory interface necessary to power the third-generation iPad's then-new Retina display, and a new variant with a single-core CPU was recently spotted in the Apple TV.

Finally, the most recent chip: the Apple A6. This chip, which to date has appeared only in the iPhone 5, marries two of Apple's custom-designed "Swift" CPU cores to a triple-core Imagination Technologies PowerVR SGX 543MP3 GPU and 1GB of RAM, roughly doubling the performance of the A5 in every respect. The CPU doubles the A5's performance by increasing both the clock speed and the number of instructions per clock (IPC) it can execute relative to Cortex A9. The GPU gets there by adding another core and increasing clock speeds. As with the A5, the A6 has a special A6X variant used in the full-sized iPad that uses the same dual-core CPU but ups the ante in the graphics department with a quad-core PowerVR SGX 554MP4 and a wider memory interface.
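As a rough illustration of why raising both clock speed and IPC compounds, here is a minimal Python sketch of the usual first-order model, in which single-threaded performance scales with IPC multiplied by clock speed. The function name and the example ratios are hypothetical, chosen only to show how two modest gains can multiply to roughly 2x; they are not measured figures for the A5 or A6, and real chips also depend on caches, memory, and the workload.

```python
def relative_performance(ipc_ratio: float, clock_ratio: float) -> float:
    """First-order model (an approximation, not a benchmark):
    single-thread speedup ~= (new IPC / old IPC) * (new clock / old clock)."""
    if ipc_ratio <= 0 or clock_ratio <= 0:
        raise ValueError("ratios must be positive")
    return ipc_ratio * clock_ratio


# Hypothetical inputs: ~25% more work per clock at ~1.6x the clock speed.
speedup = relative_performance(ipc_ratio=1.25, clock_ratio=1.6)
print(f"estimated speedup: {speedup:.2f}x")  # estimated speedup: 2.00x
```

Neither factor alone has to be dramatic; it is the product that roughly doubles performance.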

The "die shot" of Apple's A6, as done by Chipworks. They've highlighted the CPU and GPU cores, but there are lots of other components that make up an SoC.

Apple SoCs all prioritize graphics performance over everything else, both to support the large number of games available for the platform and to further Apple's push toward high-resolution display panels. The chips tend to have less CPU horsepower and RAM than the chips used in most high-end Android phones (Apple has yet to ship a quad-core CPU, opting instead to push dual-core chips), but tight control over iOS makes this a non-issue. Apple has a relative handful of iOS devices it needs to support, so it's trivial for Apple and third-party developers to make whatever tweaks and optimizations they need to keep the operating system and its apps running smoothly even if the hardware is a little older. Whatever you think of Apple's policies and its "walled garden" approach to applications, this is where the tight integration between the company's hardware and software pays off.

Knowing what we do about Apple's priorities, we can make some pretty good educated guesses about what we'll see in a hypothetical A7 chip even if the company never gives details about its chips before they're introduced (or even after, since we often have to rely on outfits like Chipworks to take new devices apart before we can say for sure what's in them).

On the CPU side, we'd bet that Apple will focus on squeezing more performance out of Swift, whether by improving the architecture's efficiency or increasing the clock speed. A quad-core version is theoretically possible, but to date Apple has focused on fewer fast CPU cores rather than more, slower ones, most likely out of concern about power consumption and the total die size of the SoC (the larger the chip, the more it costs to produce, and Apple loves its profit margins). As for the GPU, Imagination's next-generation PowerVR Series6 GPUs are right around the corner. Since Apple has used Imagination exclusively in its custom chips up until now, it's not likely to rock this boat.

Qualcomm

Qualcomm CEO Paul Jacobs introduces the Snapdragon 800 series SoCs at CES 2013.

Qualcomm is hands-down the biggest player in the mobile chipmaking game right now. Even Samsung, a company that makes and ships its own SoCs in the international versions of its phones, often goes with Qualcomm chips in the US. With this popularity comes complexity: Wikipedia lists 19 distinct model numbers in the Snapdragon S4 lineup alone, and those aren't even Qualcomm's newest chips. So we'll pick four of the most prominent to focus on, since these are the ones you're most likely to see in a device you could buy in the next year or so.

Let's start with the basics: Qualcomm is the only company on our list that creates both its own CPU and GPU architectures, rather than licensing one or the other design from ARM or another company. Its current CPU architecture, called "Krait," is faster clock-for-clock than ARM's Cortex A9 but slower than Cortex A15 (the upside is that it's also more power-efficient than A15). Its GPU products are called "Adreno," and they actually have their roots in a mobile graphics division that Qualcomm bought from AMD back in 2009 for a scant $65 million. Both CPU and GPU tend to be among the faster products on the market today, which is one of the reasons why they're so popular.

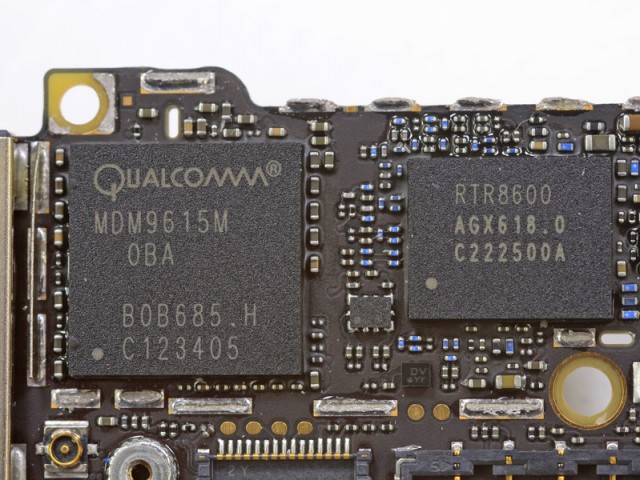

The real secret to Qualcomm's success, though, is its prowess in cellular modems. For quite a while, Qualcomm was the only company offering chips with an LTE modem integrated into the SoC itself. Plenty of phones make room for separate modems and SoCs, but integrating the modem into the SoC frees up space on the phone's logic board, saves a little bit of power, and keeps OEMs from having to buy yet another chip. Even companies that make their own chips use Qualcomm modems: as we noted, almost all of Samsung's US products come with a Qualcomm chip, and phones like the BlackBerry Z10 use a Qualcomm chip in the US even though they use a Texas Instruments chip abroad. Apple's current iPhones also rely on separate Qualcomm modem chips to provide cellular connectivity.

Qualcomm's modems are key to its success. Here is the standalone MDM9615M modem that enables the iPhone 5's 4G connectivity.

Add these modems to Qualcomm's competitive CPUs and GPUs, and it's no wonder the Snapdragon has been such a success for the company. Qualcomm will finally start to see some real competition on this front soon: Broadcom, Nvidia, and Intel are all catching up and should be shipping their own LTE modems this year, but for now Qualcomm's solutions are established and mature. Expect Qualcomm to continue to provide connectivity for most devices.

Let's get to the Snapdragon chips themselves, starting with the oldest and working our way up. Snapdragon's S4 Plus, particularly the highest-end model (part number MSM8960), combines two Krait cores running at 1.7GHz with an Adreno 225 GPU. This GPU is roughly comparable to the Imagination Technologies GPU in Apple's A5, while the Krait CPU is somewhere between the A5 and the A6. This chip is practically everywhere: it powers high-end Android phones from a year or so ago (the US version of Samsung's Galaxy S III) as well as high-end phones from other ecosystems (Nokia's Lumia 920 among many other Windows phones, plus BlackBerry's Z10). It's still a pretty popular choice for those who want to make a phone but don't want to spend the money (or provide the larger battery) for Qualcomm's heavy-duty quad-core SoCs. Look for the S4 Plus series to be replaced in mid-range phones by the Snapdragon 400 series chips, which combine the same dual-core Krait CPU with a slightly more powerful Adreno 305 GPU (the HTC First is the first new midrange phone to use it, and others will likely follow).

Next up is the Snapdragon S4 Pro (in particular, part number APQ8064). This chip combines a quad-core Krait CPU with a significantly beefed up Adreno 320 GPU. Both CPU and GPU trade blows with Apple's A6 in our standard benchmarks, but the CPU is usually faster as long as all four of its cores are actually being used by your apps. This chip is common in high-end phones released toward the end of last year, including such noteworthy models as LG's Optimus G, the Nexus 4, and HTC's Droid DNA. It's powerful, but it can get a little toasty: if you've been running the SoC full-tilt for a while, the Optimus G's screen brightness will automatically turn down to reduce the heat, and the Nexus 4 will throttle the chip and slow down if it's getting too hot.
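The Nexus 4's behavior is an example of thermal throttling. This minimal Python sketch shows the general idea under stated assumptions: the class name, clock steps, and temperature thresholds are all hypothetical, and real phones rely on vendor-specific thermal drivers and governors rather than anything this simple. Using two thresholds gives the policy hysteresis, so the clock doesn't bounce up and down when the temperature hovers around a single limit.

```python
from dataclasses import dataclass, field


@dataclass
class ThermalGovernor:
    """Toy throttling policy with hypothetical numbers (not any phone's real
    firmware): step the CPU clock down while the SoC is too hot, and step it
    back up only once it has cooled well below the limit (hysteresis)."""
    max_mhz: int = 1500
    min_mhz: int = 384
    step_mhz: int = 200
    hot_c: float = 80.0   # throttle at or above this temperature
    cool_c: float = 70.0  # allow the clock to rise again at or below this
    current_mhz: int = field(init=False)

    def __post_init__(self) -> None:
        self.current_mhz = self.max_mhz  # start at full speed

    def update(self, temp_c: float) -> int:
        if temp_c >= self.hot_c:
            self.current_mhz = max(self.min_mhz, self.current_mhz - self.step_mhz)
        elif temp_c <= self.cool_c:
            self.current_mhz = min(self.max_mhz, self.current_mhz + self.step_mhz)
        # Between cool_c and hot_c the clock is held steady to avoid oscillating.
        return self.current_mhz


gov = ThermalGovernor()
readings_c = [65, 82, 85, 78, 75, 68, 66]
print([gov.update(t) for t in readings_c])
# [1500, 1300, 1100, 1100, 1100, 1300, 1500]
```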

The fastest, newest Qualcomm chip that's actually showing up in phones now is the Snapdragon 600, a chip Qualcomm unveiled at CES back in January. Like the S4 Pro, this Snapdragon features a quad-core Krait CPU and Adreno 320 GPU, but that doesn't mean they're the same chip. The Krait in the Snapdragon 600 is a revision called "Krait 300" that both runs at a higher clock speed than the S4 Pro's Krait (1.9GHz compared to 1.7GHz) and includes a number of architectural tweaks that make it faster than the original Krait at the same clock speed. The Snapdragon 600 will be coming to us in high-end phones like the US version of Samsung's Galaxy S4, HTC's One, and LG's Optimus G Pro. Our benchmarks for the latter phone show the Snapdragon 600 outdoing the S4 Pro by 25 to 30 percent in many tests, which is a sizable step up (though the Adreno 320 GPU is the same in both chips).

Finally, look ahead to the future and you'll see the Snapdragon 800, Qualcomm's next flagship chip that's due in the second quarter of this year. This chip's quad-core Krait 400 CPU again introduces a few mild tweaks that should make it faster clock-for-clock than the Krait 300, and it also runs at a speedier 2.3GHz. The chip sports an upgraded Adreno 330 GPU that supports resolutions up to a massive 3840×2160, and it moves to a 64-bit memory interface (everything we've discussed up until now has used a 32-bit interface). All of this extra hardware suggests that this chip is destined for tablets (a market segment where Qualcomm is less prevalent) rather than smartphones, but this doesn't necessarily preclude its use in high-end smartphones. We'll know more once the first round of Snapdragon 800-equipped devices is announced.
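Why does a 64-bit interface matter? To a first approximation, peak memory bandwidth is the bus width in bytes multiplied by the number of transfers per second, so doubling the width doubles the theoretical peak at the same memory speed. The sketch assumes a hypothetical 1600 MT/s data rate purely for illustration; it is not a quoted spec for any of these chips, and sustained real-world bandwidth is lower than the theoretical peak.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: float) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes/s):
    (bus width in bytes) * (transfers per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


# Hypothetical memory running at 1600 MT/s on two different bus widths.
for width in (32, 64):
    print(f"{width}-bit: {peak_bandwidth_gb_s(width, 1600):.1f} GB/s")
# 32-bit: 6.4 GB/s
# 64-bit: 12.8 GB/s
```

The same arithmetic explains why high-resolution tablets such as the third-generation iPad needed a wider memory interface: more pixels per frame means more bytes moved per second.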

Qualcomm is in a good position. Its chips are widely used, and its roadmap evolves at a brisk and predictable pace. Things may look less rosy for the company when competing LTE modems start to become more common, but for now it's safe to say that most high-end phones in the US are going to keep using Qualcomm chips.

Samsung

Samsung usually uses its own chips in its own phones and tablets, but not in the US.

Samsung has three-or-so chips that are currently shipping in its phones and tablets. The first (and oldest) of the three is the Exynos 4 Quad, which powers the Galaxy Note 10.1, Galaxy Note 8.0, Galaxy Note II, and international versions of the Galaxy S III. This particular variant includes four Cortex A9 CPU cores and an ARM Mali-400 GPU. Neither is cutting edge, but the GPU performance is better than that of Nvidia's Tegra 3, and the CPU performance is fairly similar (given similar clock speeds, anyway).

The other chips are both from the Exynos 5 series, but they're quite different from each other. The first is the relatively straightforward Exynos 5 Dual, which powers both the Nexus 10 tablet and Samsung's $249 ARM Chromebook. This chip combines two ARM Cortex A15 cores with ARM's Mali-T604 GPU, and the result is the fastest GPU performance in any Android tablet at the moment and the fastest CPU performance in any ARM-based device, period. (This will quickly stop being the case as other A15-based devices start hitting the market this year.) The chip is a bit more power-hungry than its Cortex A9-based predecessor and other designs from Apple and Qualcomm, but manufacturing process advancements absorb most of this penalty, and Exynos 5 Dual devices still end up with decent battery life overall.

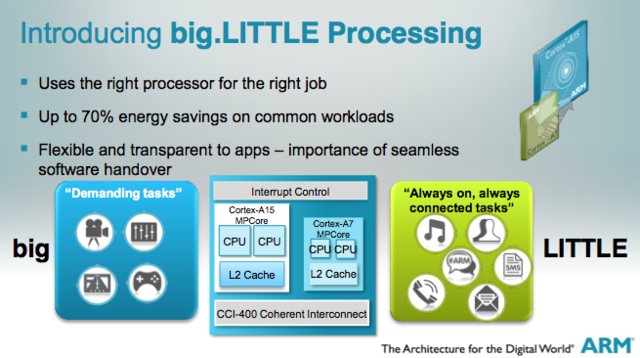

Finally, we have the Exynos 5 Octa, which is coming to market first in the international version of the forthcoming Galaxy S4. This SoC is generally said to have eight CPU cores, and while this is technically true, we've already pointed out that not all of these cores are created equal. The SoC combines four Cortex A15 cores for performance and four Cortex A7 cores that can run all of the same code, but much more slowly. Tasks that don't need a ton of CPU power can execute on the A7 cores, and tasks that do can execute on the A15s, but it's unlikely that all eight cores can be active at the same time. This chip's maximum CPU performance, then, will be more in line with a quad-core Cortex A15 chip like Nvidia's Tegra 4.
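One way to run a big.LITTLE chip so that only four cores are active at once is cluster migration: the OS runs everything on the low-power cluster and moves the whole workload to the fast cluster when demand rises. This Python sketch is a toy model of that policy under stated assumptions; the enum, the function name, and the load thresholds are hypothetical, and real schedulers (including whatever Samsung actually ships) are considerably more sophisticated. Using two different thresholds keeps the chip from flipping between clusters on every small change in load.

```python
from enum import Enum


class Cluster(Enum):
    LITTLE = "4x Cortex A7"  # slower, power-efficient cores
    BIG = "4x Cortex A15"    # faster, power-hungry cores


def choose_cluster(current: Cluster, load: float,
                   up_threshold: float = 0.85,
                   down_threshold: float = 0.30) -> Cluster:
    """Toy cluster-migration policy with hypothetical thresholds.
    `load` is the utilization (0.0-1.0) of whichever cluster is active.
    Only one cluster runs at a time, so at most four cores are ever active."""
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0.0 and 1.0")
    if current is Cluster.LITTLE and load > up_threshold:
        return Cluster.BIG      # LITTLE cores are nearly saturated: migrate up
    if current is Cluster.BIG and load < down_threshold:
        return Cluster.LITTLE   # demand has dropped: migrate back down
    return current


active = Cluster.LITTLE
history = []
for load in (0.20, 0.90, 0.60, 0.25, 0.10):
    active = choose_cluster(active, load)
    history.append(active.name)
print(history)  # ['LITTLE', 'BIG', 'BIG', 'LITTLE', 'LITTLE']
```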

The Octa also ditches ARM's GPU designs for one by Imagination Technologies, a triple-core PowerVR SGX 544MP3. This is nearly identical to the 543MP3 used in Apple's A6, and the performance should be very similar. The only difference is that the 544MP3 supports Direct3D, a necessity if the Octa is to make its way into Windows phones or Windows RT tablets. Apple's competitors in the chip space are finally beginning to catch up with Apple's GPU performance, something we couldn't have said of many chips even a year ago.

Samsung's Exynos 5 Octa uses a CPU core arrangement called "big.LITTLE" to save power.

Samsung's chips have been known to appear in products from other companies, but they ship most frequently in Samsung's own phones, tablets, and (more recently) laptops. Samsung has the advantage of being a more integrated company than many of its competitors: not only does it make and sell its own phones and tablets, it also manufactures many of the components that appear in those devices, including the screens and the chips themselves. Nvidia and Qualcomm both typically outsource their chip production to TSMC, a company that also manufactures AMD's and Nvidia's PC graphics chips. Meanwhile, Apple (Samsung's biggest competitor in the mobile market) relies on Samsung for the production of the A5 and A6 chips that power its iOS devices.

Texas Instruments

Texas Instruments is an odd duck in this discussion. On the one hand, it provides chips for many prominent devices past and present, including Amazon's entire Kindle Fire lineup, Samsung's Galaxy S II (and several other pre-Galaxy S III Samsung devices), and the international version of the BlackBerry Z10. On the other hand, TI has announced that it is exiting the market for smartphone and tablet SoCs and will be focusing on less-competitive, higher-margin markets: think embedded systems and factories. That doesn't mean it will be leaving the consumer market all of a sudden, just that it won't be devoting resources to new chips, and its existing chips will become more and more rare as time goes on.

The most common TI chips you'll find in products today belong to the OMAP4 series, which consists of three chips: the OMAP4430, the OMAP4460, and the OMAP4470. All use a dual-core Cortex A9 CPU (the higher the model number is, the higher the clock speed) alongside a single-core Imagination Technologies PowerVR SGX540 (in the 4430 and 4460) and a single-core PowerVR SGX544 (in the 4470). Two low-power ARM Cortex M3 cores are also included to help process background tasks while eating less battery.

The OMAP4's CPU performance is lower than that of the newer chips from Qualcomm or Nvidia, but like Apple's A5 it's generally good enough, especially when paired with Jelly Bean (or something like BlackBerry 10, which is optimized for it). The GPU performance, however, often lags behind not just newer chips, but also contemporaneous chips like the A5 or Nvidia's Tegra 3 (especially in the lower-end chips).

TI has one more consumer-targeted design in its pipeline, and it will probably be its last: the OMAP5. It uses the same basic setup as OMAP4, but everything has been upgraded: the two Cortex A9s have been exchanged for A15s, the Cortex M3s have been exchanged for M4s, and the GPU has been bumped to a dual-core PowerVR SGX544MP2 rather than the single-core version (the GPU's clock speed has also been increased to 532MHz, a little less than twice as fast as the PowerVR SGX544 in the OMAP4470). This should all add up to a GPU that's between three and four times as fast as its predecessor, always a welcome improvement.
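The "three to four times" estimate follows from a back-of-the-envelope calculation, assuming (optimistically) that GPU throughput scales linearly with both core count and clock speed. Here $N$ is the number of GPU cores and $r_f$ is the clock-speed ratio that the text describes as "a little less than twice"; taking that to mean somewhere between 1.5 and 2 is our assumption:

```latex
\text{speedup} \approx \frac{N_{\text{OMAP5}}}{N_{\text{OMAP4}}} \times r_f
  = \frac{2}{1} \times r_f,
\qquad 1.5 \le r_f < 2 \;\Longrightarrow\; 3 \le \text{speedup} < 4
```

Real-world gains also depend on memory bandwidth and drivers, so this is a ceiling-style estimate rather than a benchmark result.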

OMAP5 is reportedly due in the second quarter of this year, so any day now. Even so, we haven't heard much about devices that will be using it. This silence may be because the product isn't actually on the market yet, but it may also be the case that TI's anticipated withdrawal from the market has killed any chance this chip had to succeed. TI will probably be willing to cut buyers some pretty good deals, but if I had the choice between buying a chip from a company with a well-charted roadmap (like Qualcomm or Nvidia) and buying one from a company that has announced its intent to completely abandon the consumer market, I know which one I'd choose.

Nvidia

Nvidia's "Kayla" platform is a Tegra-equipped motherboard aimed at developers.

The Tegra 3 is Nvidia's current SoC, and though it's getting a bit long in the tooth, it's still showing up in some relatively high-profile products. The chip uses four ARM Cortex A9 CPU cores and a custom-designed GPU made by Nvidia, which makes sense given its history as a graphics company. The SoC also includes a fifth low-power CPU core called a "companion core" designed to perform background tasks when your phone or tablet is idle, allowing the main CPU cores to power down and save your battery. There are a few different Tegra 3 variants, and they differ mostly in clock speed and memory bandwidth rather than core count.

The Tegra 3's CPU performs reasonably well, though at this point a quad-core Cortex A9 is going to feel slower than a dual-core CPU based on a newer architecture like the Cortex A15 simply because there aren't that many heavily threaded apps on phones and tablets these days. The GPU has also been surpassed by other offerings from Qualcomm, Apple, and Samsung, though the games actually available for Android today can usually be played without issue.
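The trade-off between more cores and faster cores can be made concrete with Amdahl's law: if only a fraction p of a workload can run in parallel, n cores speed it up by 1 / ((1 - p) + p/n), and that result is then scaled by how fast each individual core is. In this minimal Python sketch, the assumption that one Cortex A15 core is 1.5x as fast as one Cortex A9 core, and the two parallel fractions, are hypothetical values chosen for illustration, not measurements.

```python
def effective_speed(per_core_speed: float, cores: int, parallel_fraction: float) -> float:
    """Amdahl's law scaled by per-core speed.
    Returns throughput relative to a single reference core (speed 1.0)."""
    if cores < 1 or not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("need cores >= 1 and 0 <= parallel_fraction <= 1")
    serial = 1.0 - parallel_fraction
    return per_core_speed / (serial + parallel_fraction / cores)


# Hypothetical: an A15 core is 1.5x an A9 core; compare two app profiles.
for p in (0.3, 1.0):
    quad_a9 = effective_speed(1.0, 4, p)
    dual_a15 = effective_speed(1.5, 2, p)
    print(f"parallel={p:.0%}: quad A9 {quad_a9:.2f}, dual A15 {dual_a15:.2f}")
# parallel=30%: quad A9 1.29, dual A15 1.76
# parallel=100%: quad A9 4.00, dual A15 3.00
```

With mostly serial apps the dual-core A15 comes out ahead; the quad-core A9 only pulls ahead when software actually keeps all four cores busy.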

The Tegra 3 isn't as prevalent in phones and tablets as Qualcomm's chips, but it still powers plenty of Android and Windows RT devices. The Nexus 7, HTC One X+, Microsoft Surface, Asus VivoTab RT, and Asus Transformer Prime are all prominent devices using Nvidia silicon. The Ouya game console also uses a Tegra 3.

Tegra 3's successor is (unsurprisingly) called the Tegra 4, and the first devices to use it will be coming out in the next few months. Nvidia's own Project Shield gaming console will be one of the earliest to use it, but Vizio and Toshiba have both announced tablets that will use the chip as well. Tegra 4 uses the same basic configuration of CPU cores as Tegra 3—four cores, plus a low-power "companion core"—but trades the Cortex A9s for much more powerful Cortex A15s. The GPU is also much-improved and should go toe-to-toe with the GPU in Apple's iPad 4.

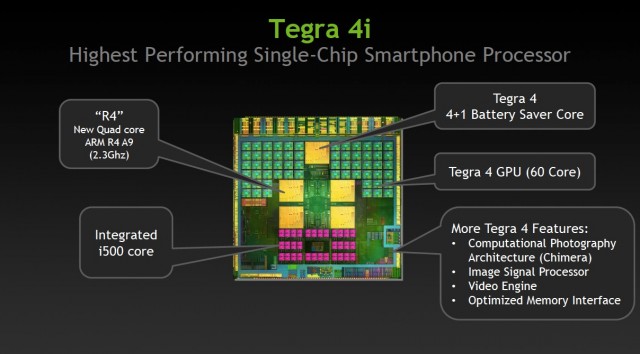

Tegra 4i is a smaller, more smartphone-centric version of Tegra 4.

Tegra 4 is aimed at tablets and the very highest-end smartphones, but Nvidia is going a different route for mainstream smartphones. The Tegra 4i, due toward the end of this year, has the same basic GPU architecture as Tegra 4, but it uses a narrower memory interface (32-bit as opposed to 64-bit) and fewer GPU cores (60 instead of 72). The CPU is also a little weaker: like Tegra 3, it comes with four Cortex A9 CPU cores and one "companion core," but it's based on a revision of Cortex A9 called "Cortex A9 R4," which promises higher performance than the original Cortex A9 at the same clock speed. Maximum clock speeds have also been increased significantly over Tegra 3, from 1.7GHz to 2.3GHz.

What will help Tegra 4i the most is the presence of an integrated LTE modem, the Icera i500. We've already talked about the benefits of having a modem integrated directly into the SoC itself, but this one has some unique aspects. The i500 is a "soft modem," which means that instead of having bits and pieces dedicated to communicating over specific bands or with specific standards, it has some general-purpose hardware that can be programmed to communicate over any of them as long as the rest of the hardware supports it. In theory, this would remove the need to build different models of a phone to serve different markets or different carriers. Both Tegra 4 and Tegra 4i also include a new imaging technology called "Chimera" that allows for always-on, real-time HDR photographs without the lag and blurriness that affect current HDR implementations.
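The difference between a fixed-function modem and a soft modem can be sketched as a capability check: the soft modem's support for a given standard comes from loadable software, but it can still only use bands that its radio front end physically covers. This toy Python model is purely illustrative; the class, the method names, the standard names, and the band numbers are hypothetical and do not describe the Icera i500's actual firmware or interfaces.

```python
class SoftModem:
    """Toy model of a software-defined modem (hypothetical, for illustration)."""

    def __init__(self, rf_bands: set[int]) -> None:
        # Bands the radio front end (antennas, amplifiers, filters) can physically handle.
        self.rf_bands = set(rf_bands)
        # Standards enabled in software, each mapped to the bands it is configured for.
        self.profiles: dict[str, set[int]] = {}

    def load_profile(self, standard: str, bands: set[int]) -> None:
        """'Reprogram' the general-purpose hardware for a standard."""
        self.profiles[standard] = set(bands)

    def can_connect(self, standard: str, band: int) -> bool:
        # Needs both a software profile and radio hardware that covers the band.
        return band in self.profiles.get(standard, set()) and band in self.rf_bands


modem = SoftModem(rf_bands={2, 4, 17})
print(modem.can_connect("LTE", 4))   # False: no LTE profile loaded yet
modem.load_profile("LTE", {4, 17, 20})
print(modem.can_connect("LTE", 4))   # True
print(modem.can_connect("LTE", 20))  # False: the radio front end lacks band 20
```

The last line reflects the caveat in the text: software can add standards, but only "as long as the rest of the hardware supports it."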

Neither Tegra 4 variant is here yet, but that hasn't stopped Nvidia from talking about its plans for the more distant future. "Logan," a successor to Tegra 4 due in 2014, will use the same "Kepler" GPU architecture as Nvidia's current GeForce GPUs. Aside from the accompanying performance increases, this opens the door to GPU-assisted computing, which can be quite useful in workstation and server applications. Finally, 2015's "Parker" will incorporate Nvidia's first custom-designed ARM CPU, marking a move away from ARM's stock designs.

Nvidia's biggest challenge with all of these chips is going to be breaking into a market that others have largely cornered. Tegra 3 has made some inroads for the company, but the biggest smartphone and tablet manufacturers (Apple and Samsung) already make their own chips, and (in the US at least) Qualcomm tends to be the go-to choice for most others. Still, with Texas Instruments leaving the market, we may soon see prominent companies that use its OMAP chips (Amazon, among many others) looking for an alternative. Nvidia can capitalize on this opening, especially if it can undercut Qualcomm on price (and according to Nvidia representatives I've spoken with, it can).

Intel and AMD: x86 struggles to make the jump

Intel hasn't made a perfect tablet chip yet, but systems like the ThinkPad Tablet 2 show promise.

We've talked almost exclusively about ARM-based products so far, but Intel, the 500-pound gorilla of the PC market, is still fighting to establish a reputation for making good tablet chips. Intel's current-generation products, the Ivy Bridge CPU architecture on the high end and the Clover Trail Atom platform on the low end, can't quite hit that necessary sweet spot between performance and power efficiency. Ivy Bridge tablets like Acer's Iconia W700 are still a little hot, a little heavy, a little expensive, and get only OK battery life. Clover Trail devices like Lenovo's ThinkPad Tablet 2 address all of these concerns, but their CPU and GPU performance is relatively low (GPU performance is especially bad) and the platform doesn't support Android.

Intel gets more interesting this year. Its Haswell chips should enable thinner, lighter tablets with better battery life than the Ivy Bridge models, while both the Clover Trail+ and Bay Trail Atom platforms look to deliver substantial gains in both CPU and GPU performance (Intel's cellular modems are also steadily improving, which helps). Intel's long-established relationships with the PC OEMs will ensure that both of these chips' architectures find their way into plenty of tablets, but we're still waiting for an Intel-powered smartphone to make its way to the US—so far, most Intel phones have been targeted toward "emerging markets."

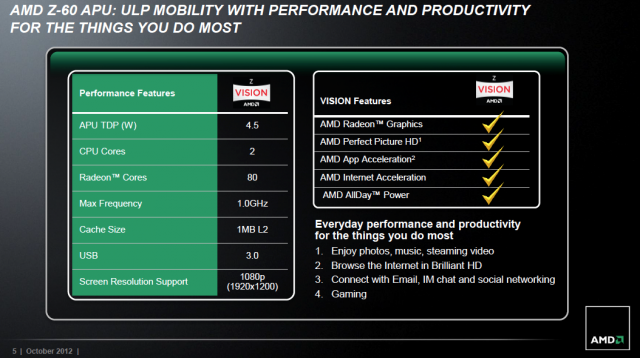

AMD has also made a few moves in this direction: it has adapted its Bobcat netbook architecture into something called Hondo, which combines a dual-core CPU with an integrated Radeon GPU. By all reports, the CPU is in the same ballpark as Clover Trail's (the architecture is faster clock-for-clock, but Hondo runs at a lower clock speed than Clover Trail), while the GPU is a substantial step up. One of our main issues with Clover Trail tablets is that their GPUs deliver sometimes choppy UI and gaming performance, so improvements on this front are more than welcome.

AMD's "Hondo" chip checks most of the important boxes, but not many tablet makers are using it.

No matter what the chip's virtues, though, its main problem is that most OEMs just aren't picking up what AMD is putting down. At our first Hondo briefing back in October of 2012, AMD played coy when asked about which devices Hondo would appear in. Since then, only two have been announced: one Windows 8 tablet apiece from Fujitsu and TV-turned-PC-maker Vizio. Bigger names are conspicuous in their absence, and unless AMD can develop a more convincing roadmap and get more people on board, it seems unlikely that its chips will amount to much.

AMD's first ARM processors are also coming in 2014, but they're targeted toward servers and not the consumer market. This (plus a number of recent hires) suggests that AMD could be looking to get into the ARM SoC game (and it could certainly handle the GPU despite selling its last mobile GPU division to Qualcomm, a move that seems short-sighted in retrospect). For now, its efforts remain focused squarely on the server room.

All of these chips have one potential trump card over the ARM chips we've talked about: x86. How important this architecture is to you will depend entirely on what you do. If you're a heavy user of Windows 8 or Windows desktop applications, x86 is a must-have, because the ARM-based Windows RT can't run any of that software. If you prefer your tablets Android-flavored, Intel in particular has done a lot of work with Google to optimize Android for x86, and every Intel-powered Android phone or tablet we've seen has indeed performed pretty smoothly. Intel also uses a technique called binary translation to run most apps from the Google Play store without requiring much (if any) extra work on the part of the developers. Still, Android doesn't need x86 the way Windows does, and if you're trying to build something on the cheap, Intel probably isn't your best option.

On Intel's end, the theory is that its manufacturing expertise will eventually outstrip its competitors' by so much that it will be able to cram more performance into a smaller, more power-efficient chip. This is one possible outcome, though I think companies like Apple and Samsung are going to be slow to move away from using their own chips in most of their mobile devices. If they can keep delivering performance that's "good enough," sticking with their own products might still be preferable to paying Intel for tablet and phone chips the way they have paid it for desktop and laptop chips for so long.

Where the market is going

There are other chipmakers in the world, but this has been a reasonably comprehensive look at the current offerings you're most likely to see in mid-to-high-end smartphones and tablets within the next year or so. Now that we've covered the products and their performance relative to each other, let's look at the market itself and the direction it seems to be heading.

First, despite the number of players, the market for third-party chips is deceptively small. Look at Apple and Samsung, by far the most successful smartphone and tablet companies—Samsung often uses Qualcomm chips in its US phones, but otherwise both companies build and ship their own chips in their own products. Especially in Apple's case, this keeps a large, lucrative chunk of the market out of reach for companies that make only chips. Qualcomm, Nvidia, and the others have to fight it out for the rest.

As we've already discussed, Qualcomm is by far the largest third-party chipmaker in this game, and it has arrived at that position by delivering chips with good performance and versatile modems. It's the go-to choice for most Android and Windows Phone handset makers: currently, its quad-core chips are popular in the highest-end phones, while midrange phones like the HTC First can go with the slightly older, cheaper, but still dependable dual-core models. If you want to get your chips into phones, Qualcomm is who you're fighting, if only because it's the biggest company you can fight.

That's exactly what Nvidia is trying to do with the Tegra 4i and its integrated Icera i500 modem: present a cheaper, all-in-one competitor to Qualcomm's mid-range and high-end products. Nvidia's biggest issue is actually similar to AMD's: it may be having some trouble convincing OEMs to use its new products. With Tegra 2 and Tegra 3, there's an impression that the company over-promised and under-delivered on things like performance and power consumption. Though it's early days yet for Tegra 4, we're still looking at a pretty short list of products that are confirmed to use it, and they're all from fairly minor players. Everything I've seen so far about Tegra 4 (admittedly through PR's rose-colored glasses) has been good, and TI's withdrawal from the market could be Nvidia's chance to snap up some new business.

Ultimately, TI's withdrawal shows how rough this market can be for any company that isn't Qualcomm. If the company that provides chips for the Kindle Fire (one of the highest-profile, most successful Android tablets, even if our reviews of the line have always been middling) can't make enough money to justify continuing, that's probably a bad sign for anyone else looking to break in. One reason SoCs have gotten so much faster so quickly is that the competition has been fierce and the potential rewards have been big. For now, this continues to be true; let's hope it stays that way.