Facebook's Open Vault storage server, codenamed "Knox."

Nearly two years ago, Facebook unveiled what it called the Open Compute Project. The idea was to share designs for data center hardware like servers, storage, and racks so that companies could build their own equipment instead of relying on the narrow options provided by hardware vendors.

While anyone could benefit, Facebook led the way in deploying the custom-made hardware in its own data centers. The project has now advanced to the point where all new servers deployed by Facebook have been designed by Facebook itself or designed by others to Facebook's demanding specifications. Custom gear today makes up more than half of the equipment in Facebook data centers. Next up, Facebook will open a 290,000-square-foot data center in Sweden stocked entirely with servers of its own design, a first for the company.

"It's the first one where we're going to have 100 percent Open Compute servers inside," Frank Frankovsky, VP of hardware design and supply chain operations at Facebook, told Ars in a phone interview this week.

Like Facebook's existing data centers in North Carolina and Oregon, the one coming online this summer in Luleå, Sweden will have tens of thousands of servers. Facebook also puts its gear in leased data center space to maintain a presence near users around the world, including at 11 colocation sites in the US. Various factors contribute to the choice of locations: taxes, available technical labor, the source and cost of power, and the climate. Facebook doesn't use traditional air conditioning, instead relying completely on "outside air and unique evaporative cooling system to keep our servers just cool enough," Frankovsky said.

Saving money by stripping out what you don’t need

At Facebook's scale, it's cheaper to maintain its own data centers than to rely on cloud service providers, Frankovsky noted. It's also cheaper for Facebook to avoid traditional server vendors.

Like Google, Facebook designs its own servers and has them built by ODMs (original design manufacturers) in Taiwan and China, rather than OEMs (original equipment manufacturers) like HP or Dell. By rolling its own, Facebook eliminates what Frankovsky calls "gratuitous differentiation," hardware features that make servers unique but do not benefit Facebook.

It could be as simple as the plastic bezel on a server with a brand logo, because that extra bit of material forces the fans to work harder. Frankovsky said a study showed a standard 1U-sized OEM server "used 28 watts of fan power to pull air through the impedance caused by that plastic bezel," whereas the equivalent Open Compute server used just three watts for that purpose.
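To put that gap in fleet-wide terms, here is a rough back-of-the-envelope sketch. Only the 28-watt and three-watt figures come from Frankovsky; the 10,000-server fleet size and the assumption that fans draw that power around the clock are illustrative guesses, not Facebook numbers.

```python
# Fan power figures quoted by Frankovsky for a 1U server.
OEM_FAN_WATTS = 28
OPEN_COMPUTE_FAN_WATTS = 3

# Assumption: fans run at the quoted draw every hour of the year.
HOURS_PER_YEAR = 24 * 365


def fan_power_saved_watts(server_count):
    """Continuous fan power saved across a fleet, in watts."""
    return (OEM_FAN_WATTS - OPEN_COMPUTE_FAN_WATTS) * server_count


def annual_energy_saved_kwh(server_count):
    """Yearly energy saved across a fleet, in kilowatt-hours."""
    return fan_power_saved_watts(server_count) * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    fleet = 10_000  # hypothetical fleet size, chosen only for illustration
    print(f"Power saved: {fan_power_saved_watts(fleet) / 1000:.0f} kW")
    print(f"Energy saved per year: {annual_energy_saved_kwh(fleet):,.0f} kWh")
```

Under those assumptions, a 25-watt difference per server adds up to 250 kilowatts of continuous draw across 10,000 machines.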

A Facebook-designed server rack.

What else does Facebook strip out? Frankovsky said that "a lot of motherboards today come with a lot of management goop. That's the technical term I like to use for it." This goop could be HP's integrated lifecycle management engine or Dell's remote server management tools.

Those features could well be useful to many customers, particularly if they have standardized on one vendor. But at Facebook's size, it doesn't make sense to rely on one vendor only, because "a design fault might take a big part of your fleet down or because a part shortage could hamstring your ability to deliver product to your data centers."

Facebook has its own data center management tools, so the stuff HP or Dell makes is unnecessary. A vendor product "comes with its own set of user interfaces, set of APIs, and a pretty GUI to tell you how fast fans are spinning and some things that in general most customers deploying these things at scale view as gratuitous differentiation," Frankovsky said. "It's different in a way that doesn't matter to me. That extra instrumentation on the motherboard, not only does it cost money to purchase it from a materials perspective, but it also causes complexity in operations."

A path for HP and Dell: Adapt to Open Compute

That doesn't mean Facebook is swearing off HP and Dell forever. "Most of our new gear is built by ODMs like Quanta," the company said in an e-mail response to one of our follow-up questions. "We do multi-source all our gear, and if an OEM can build to our standards and bring it in within 5 percent, then they are usually in those multi-source discussions."

HP and Dell have begun making designs that conform to Open Compute specifications, and Facebook said it is testing one from HP to see if it can make the cut. The company confirmed, though, that its new data center in Sweden will not include any OEM servers when it opens.

Facebook says it gets 24 percent financial savings from having a lower-cost infrastructure, and it saves 38 percent in ongoing operational costs as a result of building its own stuff. Facebook's custom-designed servers don't run different workloads than any other server might—they just run them more efficiently.

"An HP or Dell server, or Open Compute server, they can all generally run the same workloads," Frankovsky said. "It's just a matter of how much work you get done per watt per dollar."
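One way to read "work per watt per dollar" is as a single ratio that can be compared across machines. The sketch below is only an illustration of that idea: the `Server` class, the choice of requests per second as the unit of work, and every number in it are hypothetical, and nothing here reflects how Facebook actually measures its hardware.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    requests_per_second: float  # stand-in for "work"; hypothetical unit
    watts: float                # power draw under that load
    price_dollars: float        # purchase price

    def work_per_watt_per_dollar(self) -> float:
        """Work divided by power, divided again by cost."""
        return self.requests_per_second / self.watts / self.price_dollars


def more_efficient(a: Server, b: Server) -> Server:
    """Return whichever server scores higher on the ratio."""
    if a.work_per_watt_per_dollar() >= b.work_per_watt_per_dollar():
        return a
    return b


# Made-up machines that do the same work at different power and cost.
oem_box = Server("hypothetical OEM box", 1000.0, 250.0, 4000.0)
custom_box = Server("hypothetical custom box", 1000.0, 200.0, 3000.0)

if __name__ == "__main__":
    best = more_efficient(oem_box, custom_box)
    print(f"{best.name} wins on work per watt per dollar")
```

Both made-up machines handle the same load, so the ranking is decided entirely by power draw and price, which is the point Frankovsky is making.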

Facebook doesn't virtualize its servers, because its software already consumes all the hardware resources, meaning virtualization would result in a performance penalty without a gain in efficiency.

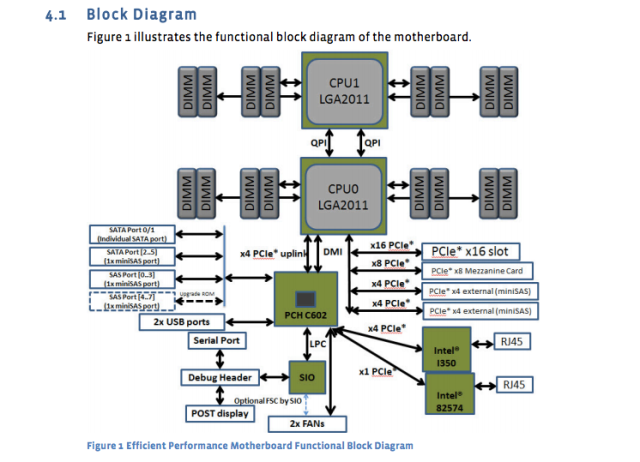

The social media giant has published the designs and specs of its own servers, motherboards, and other equipment. For example, the "Windmill" motherboard uses two Intel Xeon E5-2600 processors, with up to eight cores per CPU.

Facebook's spec sheet breaks it down:

And now a diagram of the motherboard:

Those motherboard specs were published nearly a year ago, but they are still the standard. Newly designed database server "Dragonstone" and Web server "Winterfell" rely on the Windmill motherboard, although newer Intel CPUs might hit production at Facebook later this year.

Winterfell servers.

Facebook's server designs are tailored to different tasks. As The Register's Timothy Prickett Morgan reported last month, certain database functions at Facebook require redundant power supplies, whereas other tasks can be handled by servers with multiple compute nodes sharing a single power supply.

Data centers use a mix of flash storage and traditional spinning disks, with flash serving up Facebook functionality requiring the quickest available speeds. Database servers use all flash. Web servers typically pair fast CPUs with relatively small amounts of storage and RAM; 16GB of RAM is typical, Frankovsky said. Intel and AMD chips both have a presence in Facebook gear.

And Facebook is burdened with lots of "cold storage," stuff written once and rarely accessed again. Even there, Frankovsky wants to increasingly use flash because of the failure rate of spinning disks. With tens of thousands of devices in operation, "we don't want technicians running around replacing hard drives," he said.

Data center-class flash is typically far more expensive than spinning disks, but Frankovsky says there may be a way to make it worth it. "If you use the class of NAND [flash] in thumb drives, which is typically considered sweep or scrap NAND, and you use a really cool kind of controller algorithm to characterize which cells are good and which cells are not, you could potentially build a really high-performance cold storage solution at very low cost," he said.

Taking data center flexibility to the extreme

Frankovsky wants designs so flexible that individual components can be swapped out in response to changing demand. One effort along that line is Facebook's new "Group Hug" specification for motherboards, which could accommodate processors from numerous vendors. AMD and Intel, as well as ARM chip vendors Applied Micro and Calxeda, have already pledged to support these boards with new SoC (System on Chip) products.

That was one of several news items that came out of last month's Open Compute Summit in Santa Clara, CA. In total, the announcements point to a future in which customers can "upgrade through multiple generations of processors without having to replace the motherboards or the in-rack networking," Frankovsky noted in a blog post.

Calxeda came up with an ARM-based server board that can slide into Facebook's Open Vault storage system, codenamed "Knox." "It turns the storage device into a storage server and eliminates the need for a separate server to control the hard drive," Frankovsky said. (Facebook doesn't use ARM servers today because it requires 64-bit support, but Frankovsky says "things are getting interesting" in ARM technology.)

Intel also contributed designs for a forthcoming silicon photonics technology that will allow 100Gbps interconnects, 10 times faster than the Ethernet connections Facebook uses in its data centers today. With the low latency enabled by that kind of speed, customers might be able to separate CPUs, DRAM, and storage into different parts of the rack and just add or subtract components instead of entire servers when needed, Frankovsky said. In this scenario, multiple hosts could share a flash system, improving efficiency.

Despite all these custom designs coming from outside the OEM world, HP and Dell aren't being completely left behind. They have adapted to try to capture some customers who want the flexibility of Open Compute designs. A Dell executive delivered one of the keynotes at this year's Open Compute Summit, and both HP and Dell last year announced "clean-sheet server and storage designs" that are compatible with the Open Compute Project's "Open Rack" specification.

In addition to being good for Facebook, Frankovsky hopes Open Compute will benefit server customers in general. Fidelity and Goldman Sachs are among those using custom designs tuned to their workloads as a result of Open Compute. Smaller customers might be able to benefit too, even if they rent space from a data center where they can't change the server or rack design, he said. They could "take building blocks [of Open Compute] and restructure them into physical designs that fit into their server slots," Frankovsky said.

"The industry is shifting and changing in a good way, in favor of consumers, because of Open Compute," he said.
