It's hard to believe that just a few decades ago, touchscreen technology could only be found in science fiction books and film. These days, it's almost unfathomable how we once got through our daily tasks without a trusty tablet or smartphone nearby, but it doesn't stop there. Touchscreens really are everywhere. Homes, cars, restaurants, stores, planes, wherever—they fill our lives in spaces public and private.

It took generations and several major technological advancements for touchscreens to achieve this kind of presence. Although the underlying technology behind touchscreens can be traced back to the 1940s, there's plenty of evidence that suggests touchscreens weren't feasible until at least 1965. Popular science fiction television shows like Star Trek didn't even refer to the technology until Star Trek: The Next Generation debuted in 1987, more than two decades after touchscreen technology was even deemed possible. But their inclusion in the series paralleled the advancements in the technology world, and by the late 1980s, touchscreens finally appeared to be realistic enough that consumers could actually bring the technology into their own homes.

This article is the first of a three-part series on touchscreen technology's journey from fiction to fact. The first three decades of touch are important to reflect upon in order to really appreciate the multitouch technology we're so used to having today. Today, we'll look at when these technologies first arose and who introduced them, plus we'll discuss several other pioneers who played a big role in advancing touch. Future entries in this series will study how the changes in touch displays led to essential devices for our lives today and where the technology might take us in the future. But first, let's put finger to screen and travel to the 1960s.

1960s: The first touchscreen

Historians generally consider the first finger-driven touchscreen to have been invented by E.A. Johnson in 1965 at the Royal Radar Establishment in Malvern, United Kingdom. Johnson originally described his work in an article entitled "Touch display - a novel input/output device for computers," published in Electronics Letters. The piece featured a diagram describing a type of touchscreen mechanism that many smartphones use today, what we now know as capacitive touch. Two years later, Johnson further expounded on the technology with photographs and diagrams in "Touch Displays: A Programmed Man-Machine Interface," published in Ergonomics in 1967.

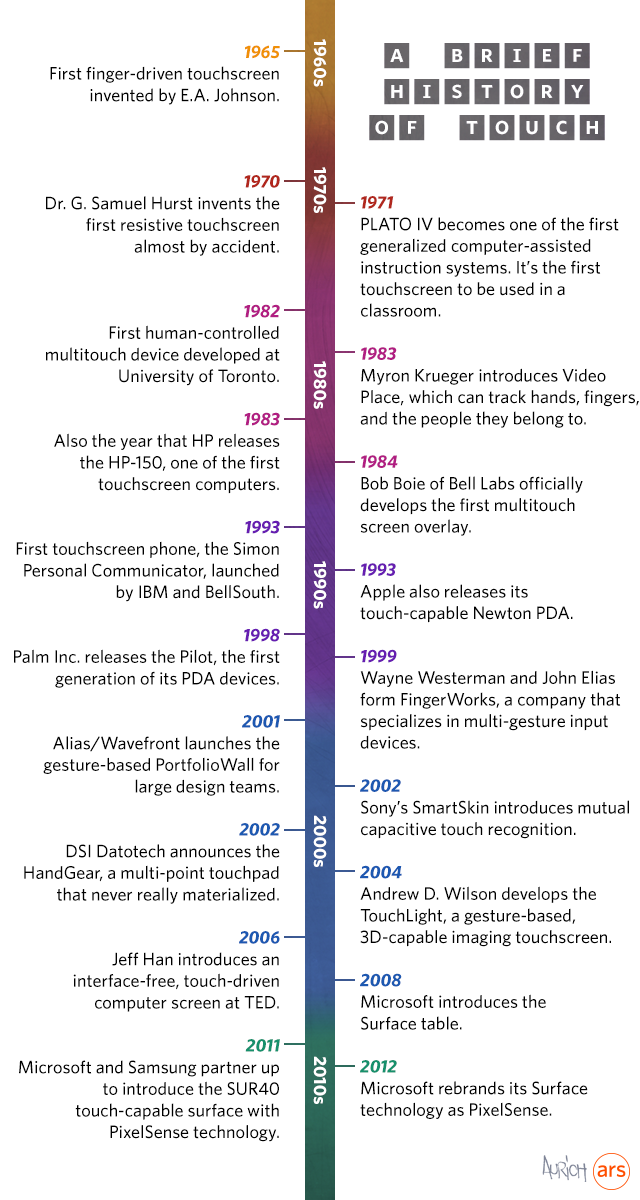

How capacitive touchscreens work.

A capacitive touchscreen panel uses an insulator, like glass, that is coated with a transparent conductor such as indium tin oxide (ITO). The other conductor is usually a human finger, which makes for a fine electrical conductor: touching the panel distorts its electrostatic field, and the resulting change in capacitance tells the controller where contact occurred. Johnson's initial technology could only process one touch at a time, and what we'd describe today as "multitouch" was still some way off. The invention was also binary in its interpretation of touch: the interface either registered contact or it didn't. Pressure sensitivity would arrive much later.

Even without the extra features, the early touch interface idea had some takers. Johnson's discovery was eventually adopted by air traffic controllers in the UK and remained in use until the late 1990s.

1970s: Resistive touchscreens are invented

Although capacitive touchscreens were designed first, they were eclipsed in the early years of touch by resistive touchscreens. American inventor Dr. G. Samuel Hurst developed resistive touchscreens almost accidentally, as the Berea College Magazine for alumni later recounted.

Hurst and the research team had been working at the University of Kentucky. The university tried to file a patent on his behalf to protect this accidental invention from duplication, but its scientific origins made it seem like it wasn't that applicable outside the laboratory.

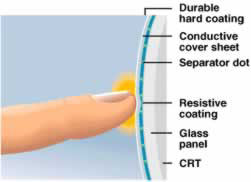

Hurst, however, had other ideas. "I thought it might be useful for other things," he said in the article. In 1970, after he returned to work at the Oak Ridge National Laboratory (ORNL), Hurst began an after-hours experiment. In his basement, Hurst and nine friends with expertise in various other fields set out to refine what had been accidentally invented. The group called its fledgling venture "Elographics," and the team discovered that a touchscreen on a computer monitor made for an excellent method of interaction. All the screen needed was a conductive cover sheet to make contact with the sheet that contained the X- and Y-axis wires. Pressure on the cover sheet allowed voltage to flow between the X wires and the Y wires, and that voltage could be measured to determine the coordinates of the touch. This discovery helped found what we today refer to as resistive touch technology. Because it responds purely to pressure rather than to electrical conductivity, it works with both a stylus and a finger.
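The coordinate measurement described above can be illustrated with a voltage-divider model. The sketch below is a minimal illustration, not Elographics' actual circuitry: it assumes a hypothetical, idealized four-wire panel in which a known drive voltage is applied across one resistive layer while the other layer senses the voltage at the contact point. Because the contact splits the driven layer into two resistors, the sensed voltage is proportional to the touch position along that axis. The function names and voltages are illustrative assumptions.

```python
from typing import Tuple


def voltage_to_position(v_sensed: float, v_drive: float, length: float) -> float:
    """Convert a sensed divider voltage into a position along one axis.

    Hypothetical idealized model: the contact point divides a perfectly uniform
    driven layer into two resistors, so v_sensed / v_drive is the fraction of
    the way across the axis.
    """
    fraction = v_sensed / v_drive
    # Clamp readings that fall slightly outside the panel because of noise.
    fraction = min(max(fraction, 0.0), 1.0)
    return fraction * length


def read_touch(v_x: float, v_y: float, v_drive: float,
               width: float, height: float) -> Tuple[float, float]:
    """Combine two single-axis measurements into an (x, y) coordinate.

    A real four-wire controller takes these readings one after the other:
    drive the X layer and sense v_x, then drive the Y layer and sense v_y.
    """
    return (voltage_to_position(v_x, v_drive, width),
            voltage_to_position(v_y, v_drive, height))


# Example: 4.0 V drive, 1.0 V sensed on the X axis and 3.0 V on the Y axis.
print(read_touch(1.0, 3.0, 4.0, width=200, height=100))  # (50.0, 75.0)
```

Real panels are not perfectly uniform, so production controllers typically add a calibration step on top of this linear mapping.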

As a class of technology, resistive touchscreens tend to be very affordable to produce. Most devices and machines using this touch technology can be found in restaurants, factories, and hospitals because they are durable enough for these environments. Smartphone manufacturers have also used resistive touchscreens in the past, though their presence in the mobile space today tends to be confined to lower-end phones.

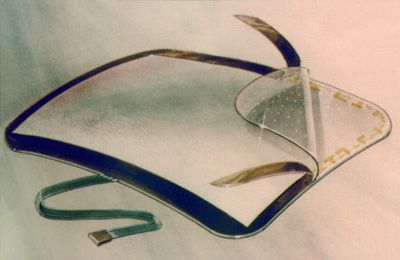

A second-gen AccuTouch curved touchscreen from EloTouch.

Elographics didn't confine itself just to resistive touch, though. The group eventually patented the first curved glass touch interface. The patent was titled "electrical sensor of plane coordinates" and it provided details on "an inexpensive electrical sensor of plane coordinates" that employed "juxtaposed sheets of conducting material having electrical equipotential lines." After this invention, Elographics was sold to "good folks in California" and became EloTouch Systems.

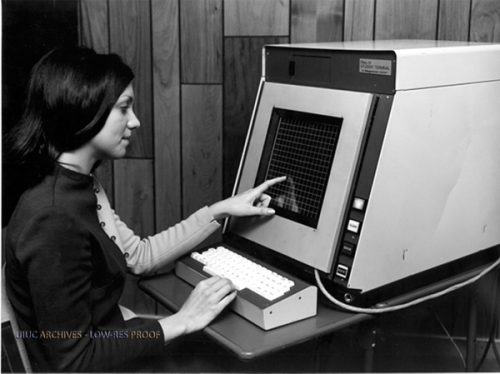

By 1971, a number of different touch-capable machines had been introduced, though none were pressure sensitive. One of the most widely used touch-capable devices at the time was the University of Illinois's PLATO IV terminal, one of the first generalized computer-assisted instruction systems. The PLATO IV eschewed capacitive or resistive touch in favor of an infrared system (we'll explain shortly). It was the first touchscreen computer used in a classroom, letting students answer questions by touching the screen.

The PLATO IV touchscreen terminal.

1980s: The decade of touch

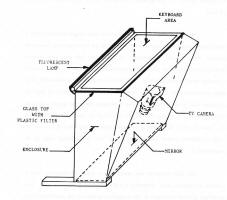

One of the first diagrams depicting multitouch input.

Shortly thereafter, gestural interaction was introduced by Myron Krueger, an American computer artist who developed an optical system that could track hand movements. Krueger introduced Video Place (later called Video Desk) in 1983, though he'd been working on the system since the late 1970s. It used projectors and video cameras to track hands, fingers, and the people they belonged to. Unlike multitouch, it wasn't entirely aware of who or what was touching, though the software could react to different poses. The display depicted what looked like shadows in a simulated space.


Though it wasn't technically touch-based (it relied on "dwell time" before it would execute an action), Bill Buxton, a longtime researcher in human-computer interaction, regards it as one of the technologies that "'wrote the book' in terms of unencumbered… rich gestural interaction. The work was more than a decade ahead of its time and was hugely influential, yet not as acknowledged as it should be." Krueger also pioneered virtual reality and interactive art later on in his career.

A diagram (in Spanish!) detailing how the Video Place worked.

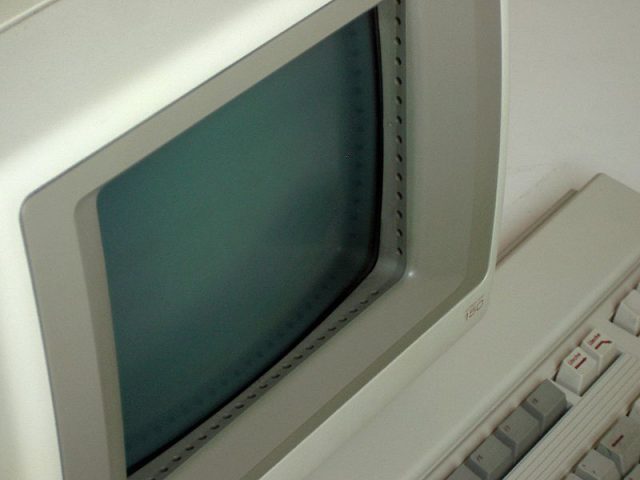

Touchscreens began to be heavily commercialized at the beginning of the 1980s. HP (then still formally known as Hewlett-Packard) tossed its hat in with the HP-150 in September of 1983. The computer used MS-DOS and featured a 9-inch Sony CRT surrounded by infrared (IR) emitters and detectors that could sense where the user's finger came down on the screen. The system cost about $2,795, but it was not immediately embraced because it had some usability issues. For instance, a hand or arm near the screen could block IR beams other than the ones interrupted by the fingertip, confusing the computer about where the finger was pointing. Holding an arm out to poke at the screen for long periods also led to what some called "gorilla arm," a term for the resulting muscle fatigue.

The HP-150 featured MS-DOS and a 9-inch touchscreen Sony CRT.
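The infrared approach used by the HP-150 (and by PLATO IV before it) can be sketched as a grid of light beams: emitters along two edges face detectors on the opposite edges, and a finger reveals its position by interrupting beams on both axes. The sketch below is a simplified model, not HP's firmware; the beam spacing and the function names are hypothetical. It also shows why stray blocking caused trouble: if every interrupted beam is averaged, a wrist or sleeve that breaks extra beams drags the estimate away from the fingertip.

```python
from typing import Optional, Sequence, Tuple

BEAM_SPACING_MM = 5.0  # hypothetical distance between adjacent IR beams


def blocked_center(beams: Sequence[bool]) -> Optional[float]:
    """Return the average index of interrupted beams, or None if none are blocked."""
    blocked = [i for i, is_blocked in enumerate(beams) if is_blocked]
    if not blocked:
        return None
    return sum(blocked) / len(blocked)


def locate_finger(x_beams: Sequence[bool], y_beams: Sequence[bool],
                  spacing_mm: float = BEAM_SPACING_MM) -> Optional[Tuple[float, float]]:
    """Estimate the touch position (in mm) from the broken beams on each axis."""
    cx = blocked_center(x_beams)
    cy = blocked_center(y_beams)
    if cx is None or cy is None:
        return None  # a touch must interrupt at least one beam on both axes
    return cx * spacing_mm, cy * spacing_mm


# A fingertip breaking vertical beams 3 and 4 and horizontal beam 7:
x = [False] * 10
x[3] = x[4] = True
y = [False] * 10
y[7] = True
print(locate_finger(x, y))  # (17.5, 35.0)

# The same touch with a wrist also breaking vertical beam 8 shifts the estimate:
x[8] = True
print(locate_finger(x, y))  # (25.0, 35.0)
```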

A year later, multitouch technology took a step forward when Bob Boie of Bell Labs developed the first transparent multitouch screen overlay, as Ars reported last year. The discovery helped create the multitouch technology that we use today in tablets and smartphones.

1990s: Touchscreens for everyone!

IBM's Simon Personal Communicator: big handset, big screen, and a stylus for touch input.

In 1993, IBM and BellSouth teamed up to launch the Simon Personal Communicator, one of the first cellphones with touchscreen technology. It featured paging capabilities, an e-mail and calendar application, an appointment schedule, an address book, a calculator, and a pen-based sketchpad. It also had a resistive touchscreen that required the use of a stylus to navigate through menus and to input data.

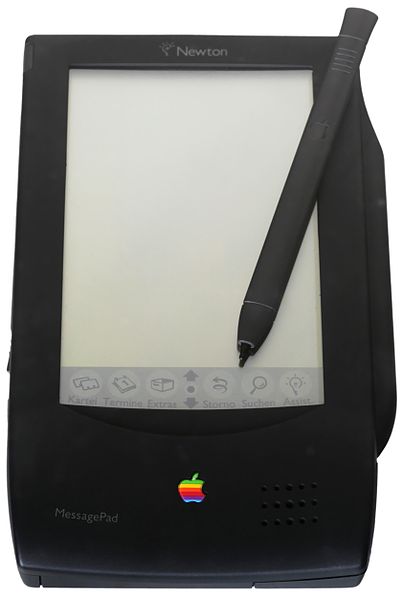

The original MessagePad 100.

Apple also launched a touchscreen PDA that year: the Newton MessagePad. Though the Newton platform had begun in 1987, the MessagePad was the first in Apple's series of devices to use it. As Time notes, Apple's CEO at the time, John Sculley, actually coined the term "PDA" (or "personal digital assistant"). Like IBM's Simon Personal Communicator, the MessagePad 100 featured handwriting recognition software and was controlled with a stylus.

Early reviews of the MessagePad focused on its useful features. Once it got into the hands of consumers, however, its shortcomings became more apparent. The handwriting recognition software didn't work too well, and the Newton didn't sell that many units. That didn't stop Apple, though; the company made the Newton for six more years, ending with the MP2000.

The first Palm Pilot.

Three years later, Palm Computing followed suit with its own PDA, dubbed the Pilot. It was the first of the company's many generations of personal digital assistants. Like the other touchscreen gadgets that preceded it, the Pilot 1000 and Pilot 5000 required the use of a stylus.

Palm's PDA had a bit more success than IBM's and Apple's offerings. Its name soon became synonymous with the word "business," helped in part by the fact that its handwriting recognition software worked very well. Users entered text, numbers, and other characters with what Palm called "Graffiti." It was simple to learn and mimicked how a person writes on a piece of paper. Graffiti was eventually ported to the Apple Newton platform.

PDA-type devices didn't necessarily feature the finger-to-screen type of touchscreens that we're used to today, but consumer adoption convinced the companies that there was enough interest in owning this type of device.

Near the end of the decade, University of Delaware graduate student Wayne Westerman published a doctoral dissertation entitled "Hand Tracking, Finger Identification, and Chordic Manipulation on a Multi-Touch Surface." The paper detailed the mechanisms behind what we know today as multitouch capacitive technology, which has gone on to become a staple feature in modern touchscreen-equipped devices.

The iGesture pad manufactured by FingerWorks.

Westerman and his faculty advisor, John Elias, eventually formed a company called FingerWorks. The group began producing a line of multitouch gesture-based products, including a gesture-based keyboard called the TouchStream, which helped people suffering from repetitive strain injuries and other medical conditions. FingerWorks also released the iGesture Pad, which allowed one-handed gestures to control the screen. FingerWorks was eventually acquired by Apple in 2005, and many attribute technologies like the multitouch trackpad and the iPhone's touchscreen to this acquisition.

2000s and beyond

With so many different technologies accumulating in the previous decades, the 2000s were the time for touchscreen technologies to really flourish. We won't cover too many specific devices here (more on those as this touchscreen series continues), but there were advancements during this decade that helped bring multitouch and gesture-based technology to the masses. The 2000s were also the era when touchscreens became the favorite tool for design collaboration.

2001: Alias|Wavefront's gesture-based PortfolioWall

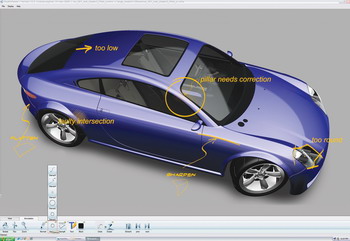

As the new millennium approached, companies were pouring more resources into integrating touchscreen technology into their daily processes. 3D animators and designers were especially targeted with the advent of the PortfolioWall. This was a large-format touchscreen meant to be a dynamic version of the boards that design studios use to track projects. Though development started in 1999, the PortfolioWall was unveiled at SIGGRAPH in 2001 and was produced in part through a collaboration between General Motors and the team at Alias|Wavefront. Buxton, who now serves as principal researcher at Microsoft Research, was the chief scientist on the project. "We're tearing down the wall and changing the way people effectively communicate in the workplace and do business," he said back then. "PortfolioWall's gestural interface allows users to completely interact with a digital asset. Looking at images now easily become part of an everyday workflow."

Bill Buxton introduces the PortfolioWall and details some of its abilities.

The PortfolioWall used a simple, gesture-based interface. It allowed users to inspect and maneuver images, animations, and 3D files with just their fingers. It was also easy to scale images, fetch 3D models, and play back video. A later version added sketch and text annotation, the ability to launch third-party applications, and a Maya-based 3D viewer for panning, rotating, and zooming 3D models. For the most part, the product was considered a digital corkboard for design-centric professions. It also cost a whopping $38,000 to get the whole setup installed: $3,000 for the presenter itself and $35,000 for the server.

The PortfolioWall allowed designers to display full-scale 3D models.

The PortfolioWall also addressed the fact that while traditional mediums like clay models and full-size drawings were still important to the design process, they were slowly being augmented by digital tools. The device included add-ons that virtually emulated those tangible mediums and served as a presentation tool for designers to show off their work in progress.

Another main draw of the PortfolioWall was its "awareness server," which helped facilitate collaboration across a network so that teams didn't have to be in the same room to review a project. Teams could have multiple walls in different spaces and still collaborate remotely.

The PortfolioWall was eventually laid to rest in 2008, but it was a prime example of how gestures interacting with the touchscreen could help control an entire operating system.

2002: Mutual capacitive sensing in Sony's SmartSkin

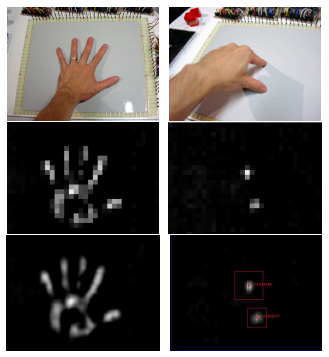

Using the Sony SmartSkin.

In 2002, Sony introduced a flat input surface that could recognize multiple hand positions and touch points at the same time. The company called it SmartSkin. The technology worked by calculating the distance between the hand and the surface with capacitive sensing and a mesh-shaped antenna. Unlike the camera-based gesture recognition systems used by other technologies, SmartSkin integrated all of its sensing elements into the touch surface, which also meant that it wouldn't malfunction in poor lighting conditions. The ultimate goal of the project was to transform surfaces used every day, like your average table or a wall, into interactive ones with the help of a nearby PC. However, the technology did more for capacitive touch than may have been intended, including its support for multiple contact points.

How the SmartSkin sensed gestures.

Jun Rekimoto at the Interaction Laboratory in Sony's Computer Science Laboratories noted the advantages of this technology in a whitepaper. He said technologies like SmartSkin offer "natural support for multiple-hand, multiple-user operations." More than two users can touch the surface at the same time without any interference. Two prototypes were developed to show SmartSkin used as an interactive table and as a gesture-recognition pad. The second prototype used a finer mesh than the first so that it could map out more precise finger coordinates. Overall, the technology was meant to offer a real-world feel for virtual objects, essentially recreating how humans use their fingers to pick up objects and manipulate them.

2002-2004: Failed tablets and Microsoft Research's TouchLight

A multitouch tablet input device named HandGear.

Multitouch technology struggled in the mainstream, appearing in specialty devices but never quite catching a big break. One almost came in 2002, when Canada-based DSI Datotech developed the HandGear + GRT device (the acronym "GRT" referred to the device's Gesture Recognition Technology). The device's multipoint touchpad worked a bit like the aforementioned iGesture pad in that it could recognize various gestures and allow users to use it as an input device to control their computers. "We wanted to make quite sure that HandGear would be easy to use," VP of Marketing Tim Heaney said in a press release. "So the technology was designed to recognize hand and finger movements which are completely natural, or intuitive, to the user, whether they're left- or right-handed. After a short learning-period, they're literally able to concentrate on the work at hand, rather than on what the fingers are doing."

HandGear also enabled users to "grab" three-dimensional objects in real time, further extending that idea of freedom and productivity in the design process. The company even made the API available for developers via Autodesk. Unfortunately, as Buxton mentions in his overview of multitouch, the company ran out of money before its product shipped, and DSI closed its doors.

Andy Wilson explains the technology behind the TouchLight.

Two years later, Andrew D. Wilson, an employee at Microsoft Research, developed a gesture-based imaging touchscreen and 3D display. The TouchLight used a rear-projection display to transform a sheet of acrylic plastic into an interactive surface. The display could sense multiple fingers and hands of more than one user, and because of its 3D capabilities, it could also be used as a makeshift mirror.

The TouchLight was a neat technology demonstration, and it was eventually licensed out for production to Eon Reality before the technology proved too expensive to be packaged into a consumer device. However, this wouldn't be Microsoft's only foray into fancy multitouch display technology.

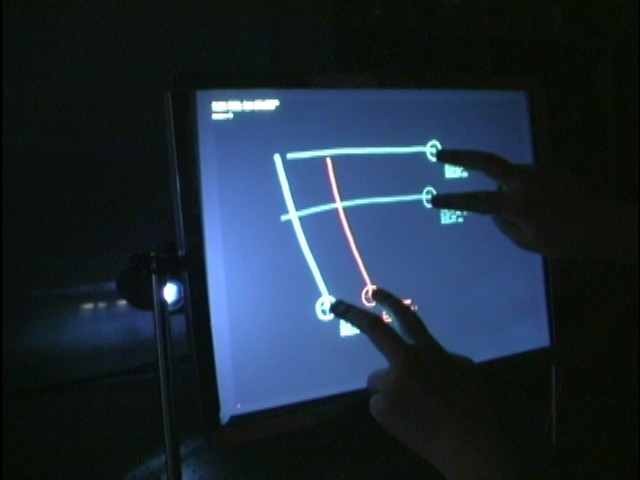

2006: Multitouch sensing through “frustrated total internal reflection”

In 2006, Jeff Han gave the first public demonstration of his intuitive, interface-free, touch-driven computer screen at a TED Conference in Monterey, CA. In his presentation, Han moved and manipulated photos on a giant light box using only his fingertips. He flicked photos, stretched them out, and pinched them away, all with a captivating natural ease. "This is something Google should have in their lobby," he joked. The demo showed that a high-resolution, scalable touchscreen was possible to build without spending too much money.

A diagram of Jeff Han's multitouch sensing using FTIR.

Han had discovered that "robust" multitouch sensing was possible using "frustrated total internal reflection" (FTIR), a technique from the biometrics community used for fingerprint imaging. FTIR works by shining light into the edge of a piece of acrylic or plexiglass. The light (infrared is commonly used) bounces back and forth between the top and bottom surfaces of the acrylic as it travels, trapped by total internal reflection. When a finger touches down on the surface, it frustrates that reflection: light scatters out of the acrylic at the point of contact, hence the term "frustrated." An infrared camera picks up this scattered light as white blobs. The computer analyzes each blob to mark where a finger is touching and assign it a coordinate, and the software can then use those coordinates to perform a task, like resizing or rotating objects.
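The blob-to-coordinate step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Han's or Perceptive Pixel's software: it assumes the infrared camera delivers a grayscale frame as a 2D list of brightness values, treats any pixel at or above a hypothetical threshold as lit, groups touching lit pixels into blobs with a breadth-first flood fill, discards tiny blobs as noise, and reports each blob's centroid as one touch point.

```python
from collections import deque
from typing import List, Tuple


def find_touches(frame: List[List[int]], threshold: int = 200,
                 min_area: int = 2) -> List[Tuple[float, float]]:
    """Return (x, y) centroids of bright blobs in an infrared camera frame.

    `threshold` and `min_area` are hypothetical tuning values.
    """
    height = len(frame)
    width = len(frame[0]) if frame else 0
    seen = [[False] * width for _ in range(height)]
    touches = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            # Flood-fill one connected bright region (4-connectivity).
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:  # ignore single-pixel sensor noise
                cx = sum(p[0] for p in pixels) / len(pixels)
                cy = sum(p[1] for p in pixels) / len(pixels)
                touches.append((cx, cy))
    return touches


frame = [
    [0, 0, 0, 0, 230, 0],    # isolated bright pixel: treated as noise
    [0, 255, 255, 0, 0, 0],  # first fingertip
    [0, 255, 255, 0, 0, 0],
    [0, 0, 0, 0, 0, 250],    # second fingertip
    [0, 0, 0, 0, 0, 250],
]
print(find_touches(frame))  # [(1.5, 1.5), (5.0, 3.5)]
```

Tracking how these centroids move between successive frames is what lets software turn raw touch points into gestures such as pinching or rotating.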

Jeff Han demonstrates his new "interface-free" touch-driven screen.

After the TED talk became a YouTube hit, Han went on to launch a startup called Perceptive Pixel. A year following the talk, he told Wired that his multitouch product did not have a name yet. And although he had some interested clients, Han said they were all "really high-end clients. Mostly defense."

Last year, Han sold his company to Microsoft in an effort to make the technology more mainstream and affordable for consumers. "Our company has always been about productivity use cases," Han told AllThingsD. "That's why we have always focused on these larger displays. Office is what people think of when they think of productivity."

2008: Microsoft Surface

Before there was a 10-inch tablet, the name "Surface" referred to Microsoft's high-end tabletop graphical touchscreen, originally built inside of an actual IKEA table with a hole cut into the top. Although it was demoed to the public in 2007, the idea originated back in 2001. Researchers at Redmond envisioned an interactive work surface that colleagues could use to manipulate objects back and forth. For many years, the work was hidden behind a non-disclosure agreement. It took 85 prototypes before Surface 1.0 was ready to go.

As RB wrote in 2007, the Microsoft Surface was essentially a computer embedded into a medium-sized table, with a large, flat display on top. The screen's image was rear-projected onto the display surface from within the table, and the system sensed where the user touched the screen through cameras mounted inside the table looking upward toward the user. As fingers and hands interacted with what was on screen, the Surface's software tracked the touch points and triggered the correct actions. The Surface could recognize several touch points at a time, as well as objects with small "domino" stickers attached to them. Later in its development cycle, Surface also gained the ability to identify devices via RFID.

Bill Gates demonstrates the Microsoft Surface.

The original Surface was unveiled at the All Things D conference in 2007. Although many of its design concepts weren't new, it very effectively illustrated the real-world use case for touchscreens integrated into something the size of a coffee table. Microsoft then demoed the 30-inch Surface at CES 2008, but the company explicitly said that it was targeting the "entertainment retail space." Surface was designed primarily for use by Microsoft's commercial customers to give consumers a taste of the hardware. The company partnered with several big-name hotel and casino companies, like Starwood and Harrah's, to showcase the technology in their lobbies. Companies like AT&T used the Surface to showcase the latest handsets to consumers entering their brick-and-mortar retail locations.

Surface at CES 2008.

Rather than refer to it as a graphical user interface (GUI), Microsoft called the Surface's interface a natural user interface, or "NUI." The phrase suggested that the technology would feel almost instinctive to the human end user, as natural as interacting with any sort of tangible object in the real world. The phrase also referred to the fact that the interface was driven primarily by the user's touch rather than by input devices like a mouse and keyboard. (Plus, NUI, pronounced "new-ey," made for a snappy, marketing-friendly acronym.)

Microsoft introduces the Samsung SUR40.

In 2011, Microsoft partnered with manufacturers like Samsung to produce sleeker, newer tabletop Surface hardware. For example, the Samsung SUR40 has a 40-inch 1080p LED display, and it drastically reduced the amount of internal space required for the touch-sensing mechanisms. At 4 inches thick, it was thinner than its predecessors, and the size reduction made it possible to mount the display on a wall rather than requiring a table to house the camera and sensors. It cost around $8,400 at the time of its launch and ran Windows 7 and Surface 2.0 software.

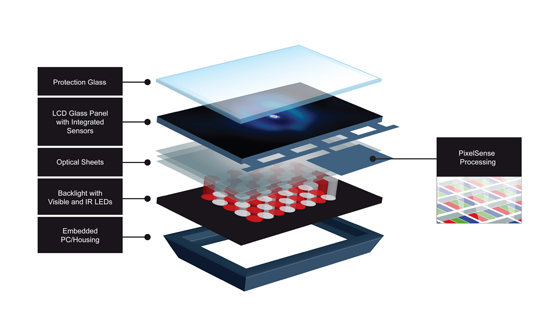

Last year, the company rebranded the technology as PixelSense once Microsoft introduced its unrelated Surface tablet to consumers. The name "PixelSense" refers to the way the technology actually works: a touch-sensitive protective glass is placed on top of an infrared backlight. When the infrared light passes through the glass and hits a finger or object on the surface, it is reflected back to sensors integrated into the display, which convert that light into an electrical signal. Each signal is referred to as a "value," and together those values create a picture of what's on the display. The picture is then analyzed using image processing techniques, and the output is sent to the computer the display is connected to.

PixelSense has four main characteristics: it doesn't require a mouse and keyboard to work, more than one user can interact with it at one time, it can recognize certain objects placed on the glass, and it supports multiple contact points. The name PixelSense could be attributed to that last point in particular, since each pixel can actually sense whether or not there was touch contact.
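The per-pixel sensing described above can be illustrated with a simple background-subtraction sketch. It is a hypothetical model rather than Microsoft's or Samsung's actual processing: it assumes each sensor reports an integer "value" for reflected infrared light, that a baseline frame is captured with nothing on the glass, and that a pixel counts as in contact when its value rises above its own baseline by at least a chosen margin. Comparing against a per-pixel baseline, rather than one global threshold, keeps a sensor that always reads high from being mistaken for a touch.

```python
from typing import List

Frame = List[List[int]]


def contact_map(values: Frame, baseline: Frame, margin: int = 40) -> List[List[bool]]:
    """Mark pixels whose reflected-IR value exceeds their idle baseline by `margin`.

    `margin` is a hypothetical tuning value, not a documented PixelSense setting.
    """
    return [
        [value - idle >= margin for value, idle in zip(row, idle_row)]
        for row, idle_row in zip(values, baseline)
    ]


def render(mask: List[List[bool]]) -> str:
    """Draw the contact map as text: '#' for contact, '.' for no contact."""
    return "\n".join("".join("#" if hit else "." for hit in row) for row in mask)


baseline = [[10, 10, 10], [12, 300, 12]]  # one sensor always reads high
values = [[90, 85, 15], [12, 320, 14]]
print(render(contact_map(values, baseline)))
# ##.
# ...
```

The resulting contact map is the kind of "picture" that later image processing, such as the blob detection used for FTIR, would then analyze.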

Although it would make an awesome living room addition, Microsoft continues to market the Surface hardware as a business tool rather than a consumer product.

Touch today—and tomorrow?

It can't be overstated: each of these technologies had a monumental impact on the gadgets we use today. Everything from our smartphones to laptop trackpads and Wacom tablets can be connected in some way to the many inventions, discoveries, and patents in the history of touchscreen technology. Android and iOS users should thank E.A. Johnson for capacitive touch-capable smartphones, while restaurants may send their regards to Dr. G. Samuel Hurst for the resistive touchscreens on their point-of-sale (POS) systems.

In the next part of our series, we'll dive deeper into the devices of today. (Just how has the work of FingerWorks impacted those iDevices anyway?) But history did not end with 2011, either. We'll also discuss how some of the current major players, like Apple and Samsung, continue contributing to the evolution of touchscreen gadgets. Don't scroll that finger, stay tuned!
